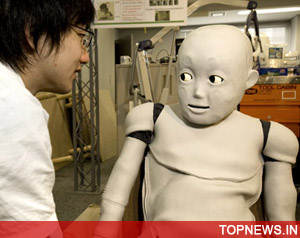

Humans can read robotic body language

London, Mar 23: A robot's eyes may not be the windows to its soul, but they can certainly help humans guess the machine's intentions, according to a new study.

Researchers led by Bilge Mutlu at Carnegie Mellon University, Pittsburgh, have demonstrated that robots "leak" non-verbal information through eye movements when interacting with humans.

Mutlu said that humans constantly give off non-verbal cues and interpret the signals of others without being consciously aware of it.

However, these interpretive skills are of no use with conventional robots, which leak no such information, making it virtually impossible to read their intentions.

The researchers tested strategies to improve robots' body language using a guessing game played by a human and a humanoid robot.

The robot is programmed to choose one object from around a dozen resting on a table, without making a move to actually pick it up.

The human must work out which object the robot has mentally selected by asking a series of yes-or-no questions.

The researchers enrolled 26 participants, who took an average of 5.5 questions to identify the correct object when the robot simply sat motionless across the table and answered verbally.

In the second trial, it answered in exactly the same way, but also swivelled its eyes to glance at its chosen object in the brief pause before answering two of the first three questions.

Participants needed fewer questions to identify the correct object (an average of just 5.0, a statistically significant difference) when they came face to face with a robot that was "leaking" information.

When the experiment was repeated with Geminoid, a lifelike android with realistic rubbery skin, around three-quarters of participants said they hadn't noticed the short glances.

Mutlu said the improvement in scores suggested that participants detected the signals subconsciously, New Scientist magazine reports.

According to him, the study suggests that people attribute mental states to robots just as they do to humans, though apparently only as long as the robot looks lifelike.

Experts have said that simply giving robots the ability to turn towards a user or nod during a conversation is important for improving the efficiency and quality of human-robot interactions.

Mutlu presented his work at the Human Robot Interaction 2009 conference in La Jolla, California, last week. (ANI)